Researcher in computer vision, machine learning and multimedia (Universidad Autónoma de Madrid)

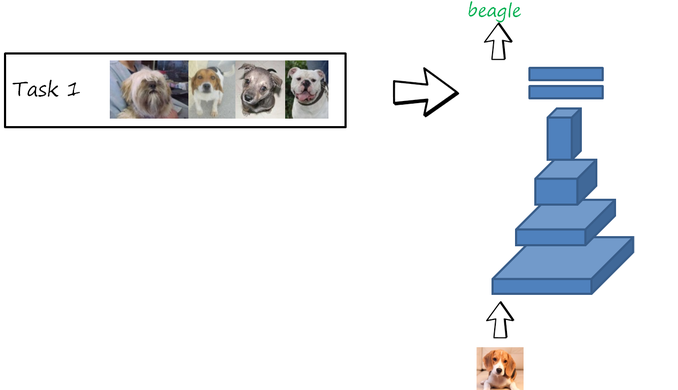

In contrast to humans, neural networks tend to quickly forget previous tasks when trained on a new one (without revisiting data from previous tasks).

Read More

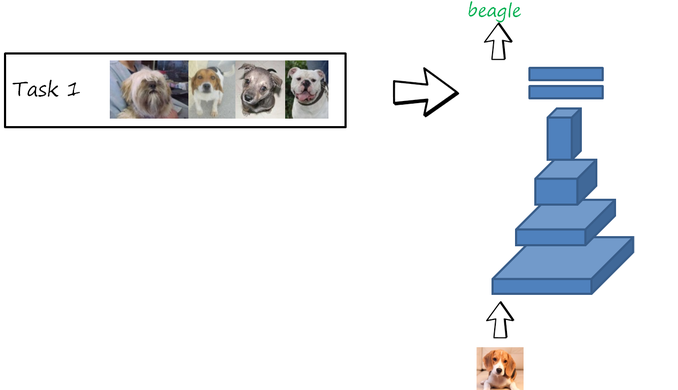

Yet another post about generative adversarial networks (GANs), pix2pix and CycleGAN. You can already find lots of websites with great introductions to GANs (such as here,

Read More

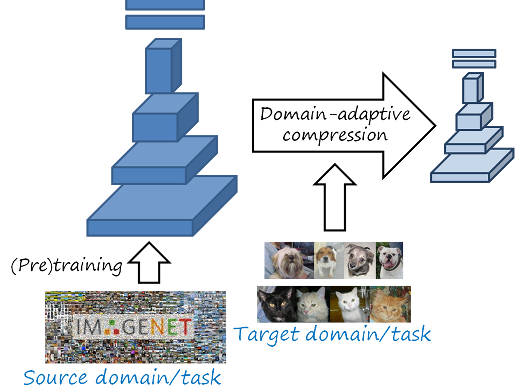

Network compression and adaptation are generally considered two independent problems, with compressed networks evaluated on the same dataset, and domain-adapted or task-adapted networks

Read More