Category: continual learning

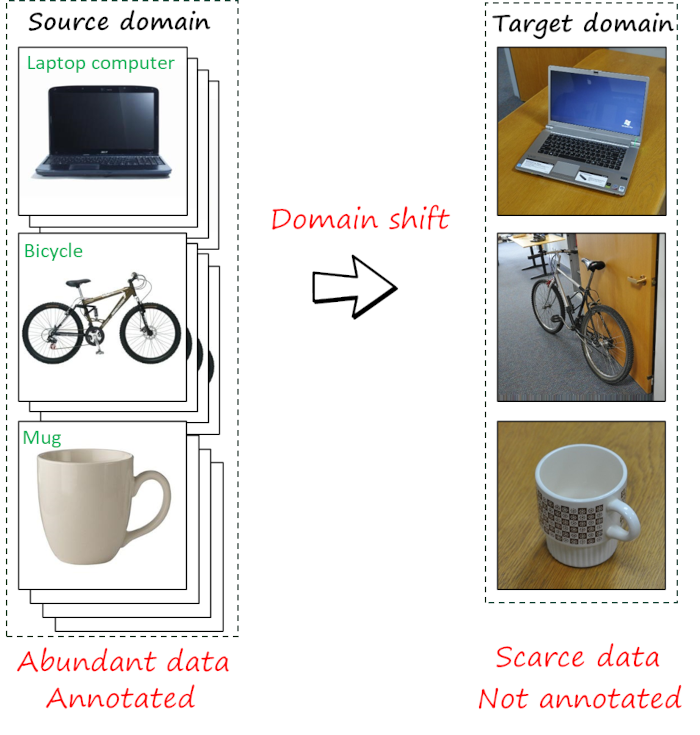

Source-free unsupervised domain adaptation

Can we perform unsupervised domain adaptation without accessing source data? Recent works show that it is not only possible but ...

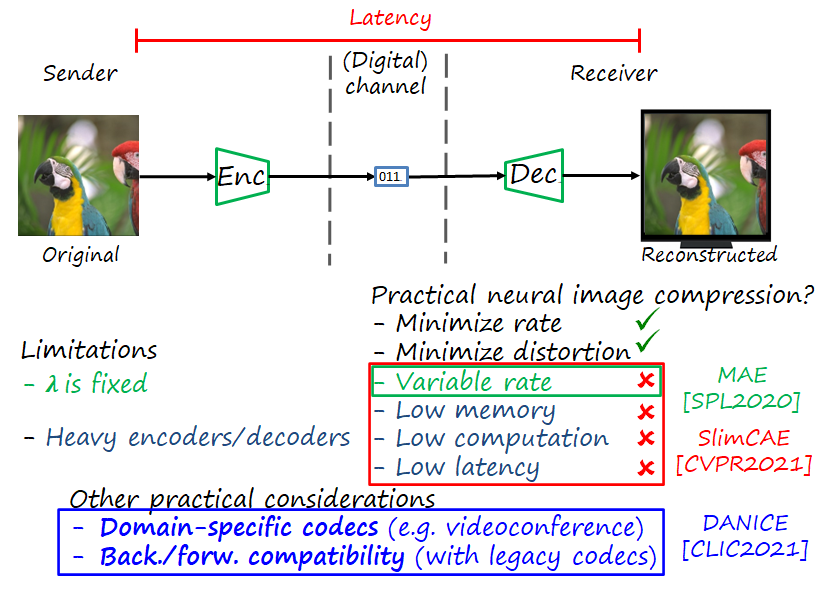

MAE, SlimCAE and DANICE: towards practical neural image compression

Neural image and video codecs achieve competitive rate-distortion performance. However, they have a series of practical limitations, such as relying ...

MeRGANs: generating images without forgetting

The problem of catastrophic forgetting (a network forgets previous tasks when learning a new one) and how to address it ...

Rotating networks to prevent catastrophic forgetting

In contrast to humans, neural networks tend to quickly forget previous tasks when trained on a new one (without revisiting ...